In April of 2018, I was a delegate at Cloud Field Day 3. One of the presenters was NetApp, and they showed off a few different services they had under development in the cloud space. I went over those services in some detail in a previous post, so I won't regurgitate all that now. One of the services still in private preview at the time was Azure NetApp Files. The idea was relatively simple: NetApp would place their hardware in Azure datacenters and configure it to support multi-tenancy and provisioning through the Azure Resource Manager. That solution is now generally available, and I was curious how it would perform compared with the other storage options for the Azure Kubernetes Service (AKS). In this post I will detail my testing methodology, the performance results, and some thoughts on which storage makes the most sense for different workload types.

The process for getting access to Azure NetApp Files is a little more complicated than I would have liked. Most services on Azure are enabled by default, and you simply go through the resource creation process. To use NetApp Files, you first have to fill out a Microsoft Form and wait for someone at NetApp to get back to you. They will want to know which subscriptions to enable the service for, and they will also want a phone call to discuss your use case. I was… not interested in talking to a NetApp salesperson. They did enable my subscription for the service, and then I had to go into Azure PowerShell and register the Microsoft.NetApp resource provider. The entire process took a couple of days, which is longer than I would have liked for a cloud service. I understand that they have limited hardware capacity in the various datacenters, so they don't want people spinning up NetApp capacity pools willy-nilly. At the same time, this is the cloud and I am impatient.

Regardless, I gained access to the service. There are three performance tiers for NetApp Files: Standard, Premium, and Ultra. Those mirror the Azure Storage categories, which also come in Standard, Premium, and Ultra. To consume NetApp Files, you create a NetApp Files account, add a capacity pool at the performance level you need, and then carve that capacity pool into volumes. The volumes are exported to a dedicated subnet on an Azure VNet. The process is very straightforward.
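The account, capacity pool, and volume hierarchy can be sketched as a small data model. This is a hypothetical illustration, not the Azure SDK: the class and method names, the 4 TiB pool size, and the rule that volume quotas cannot exceed the pool's size are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    quota_tib: float


@dataclass
class CapacityPool:
    name: str
    service_level: str  # "Standard", "Premium", or "Ultra"
    size_tib: float
    volumes: list = field(default_factory=list)

    def allocated_tib(self) -> float:
        # Total quota already carved out of this pool.
        return sum(v.quota_tib for v in self.volumes)

    def add_volume(self, name: str, quota_tib: float) -> Volume:
        # Assumed rule: a pool cannot hand out more quota than it was provisioned with.
        if self.allocated_tib() + quota_tib > self.size_tib:
            raise ValueError(f"pool {self.name!r} lacks {quota_tib} TiB of free capacity")
        volume = Volume(name, quota_tib)
        self.volumes.append(volume)
        return volume


@dataclass
class NetAppAccount:
    name: str
    pools: dict = field(default_factory=dict)

    def add_pool(self, name: str, service_level: str, size_tib: float) -> CapacityPool:
        # Each capacity pool has exactly one service level.
        pool = CapacityPool(name, service_level, size_tib)
        self.pools[name] = pool
        return pool


# Hypothetical layout: one pool per tier, one 1 TiB volume carved from each.
account = NetAppAccount("anf-demo")
for level in ("Standard", "Premium", "Ultra"):
    pool = account.add_pool(f"pool-{level.lower()}", level, size_tib=4)
    pool.add_volume(f"vol-{level.lower()}", quota_tib=1)
```

Keeping the service level on the pool rather than the volume reflects the workflow above: you choose the tier once, when you create the pool, and every volume carved from it inherits that tier.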

For my testing, I created a capacity pool for each of the three performance levels and then exported a 1 TB volume from each pool to an Azure VNet that also hosted my running AKS cluster. My AKS cluster was a single worker node of size DS2_v2. Nothing was running on the cluster aside from the default pods and a Tiller pod for Helm. I also wanted to compare the performance to the other storage options on AKS:

- Azure Disk (Standard)
- Azure Disk (Premium)
- Azure Files

The Azure Files tests use the Standard tier. There is a Premium tier of Azure Files that is not yet available for consumption by AKS. There is also the aforementioned Ultra tier for Azure Disk, which is not yet supported either. I'm sure both are forthcoming, and perhaps I will update this post when that happens.

**Update:** As a couple of commenters pointed out, Azure Files at the Premium SKU is supported with dynamic provisioning for AKS clusters running Kubernetes version 1.13.0 and higher. I have run the performance tests against the Premium tier, and I will document the results in a separate post dealing exclusively with Azure Files.

The performance tests use the FIO project packaged into a container. I am following the guidance of this GitHub repository, except that its container image is no longer available on Docker Hub. Someone else built an identical image from the Dockerfile, so I decided to use that. My tests are stored in my own GitHub repository for your reference if you want to recreate the performance testing yourself. I tried presenting the NFS storage from NetApp both with the NFS-Client provisioner deployed using Helm and with the more direct approach of creating a persistent volume that specifies the NFS server and mount path. From a performance standpoint, the two appeared to be identical.
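The direct approach mentioned above, a persistent volume that names the NFS server and export path, can be sketched as a pair of Kubernetes manifests. To keep every example here in one language, this builds them as Python dictionaries and prints JSON, which kubectl accepts as well as YAML. The server IP, export path, object names, `1Ti` capacity, and `Retain` reclaim policy are hypothetical placeholders, not the values from my cluster.

```python
import json

# Hypothetical placeholders: use the mount IP and export path shown for your NetApp volume.
NFS_SERVER = "10.0.2.4"
EXPORT_PATH = "/anf-ultra-vol"


def nfs_persistent_volume(name: str, server: str, path: str, capacity: str = "1Ti") -> dict:
    """Build a PersistentVolume that mounts an existing NFS export."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "accessModes": ["ReadWriteMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "nfs": {"server": server, "path": path},
        },
    }


def claim_for(pv: dict, claim_name: str) -> dict:
    """Build a PersistentVolumeClaim that binds explicitly to the given PV."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": claim_name},
        "spec": {
            "accessModes": pv["spec"]["accessModes"],
            "storageClassName": "",  # empty class: do not fall back to dynamic provisioning
            "volumeName": pv["metadata"]["name"],
            "resources": {"requests": {"storage": pv["spec"]["capacity"]["storage"]}},
        },
    }


pv = nfs_persistent_volume("anf-ultra-pv", NFS_SERVER, EXPORT_PATH)
pvc = claim_for(pv, "dbench-pv-claim")
# Pipe this output to `kubectl apply -f -` to create both objects.
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}, indent=2))
```

Setting `volumeName` and an empty `storageClassName` on the claim keeps Kubernetes from binding it to a dynamically provisioned disk instead of the NetApp export.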

For the dbench tests I used the following settings:

```yaml
env:
  - name: DBENCH_MOUNTPOINT
    value: /data
  - name: DBENCH_QUICK
    value: "no"
  - name: FIO_SIZE
    value: 1G
  - name: FIO_OFFSET_INCREMENT
    value: 256M
  - name: FIO_DIRECT
    value: "1"
```
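`FIO_OFFSET_INCREMENT` only matters when fio runs several jobs against the same file: fio starts job n at offset + n × offset_increment, counting jobs from 0. The sketch below assumes four jobs (the `numjobs` value is an assumption, not one of the settings above) and shows how a 256M increment spreads their starting points across the 1G test file. fio reads size suffixes with base 1024 by default. Separately, `FIO_DIRECT=1` asks fio for non-buffered I/O, usually `O_DIRECT`.

```python
# fio interprets size suffixes in base 1024 by default (kb_base=1024).
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text: str) -> int:
    """Convert a fio-style size such as '1G' or '256M' to bytes."""
    text = text.strip().upper()
    if text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)


def job_start_offsets(offset_increment: str, numjobs: int, offset: str = "0") -> list:
    """Starting byte offset of each fio sub-job: offset + n * offset_increment."""
    base, step = parse_size(offset), parse_size(offset_increment)
    return [base + n * step for n in range(numjobs)]


env = {"FIO_SIZE": "1G", "FIO_OFFSET_INCREMENT": "256M"}
# NUMJOBS = 4 is an assumption for illustration only.
offsets = job_start_offsets(env["FIO_OFFSET_INCREMENT"], numjobs=4)
print([o // parse_size("1M") for o in offsets])  # start offsets in MiB
```

With four jobs, 4 × 256M equals the 1G file size, so the jobs begin at evenly spaced points instead of all hammering the same region.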

There are many possible variations for these settings, and I would encourage you to test based on an actual workload you plan to run. I didn't have a specific workload in mind, so I used these settings for all the different storage types. I ran the tests one at a time with nothing else running on the cluster to prevent any potential contention for resources. Let's check out the performance metrics for each test.

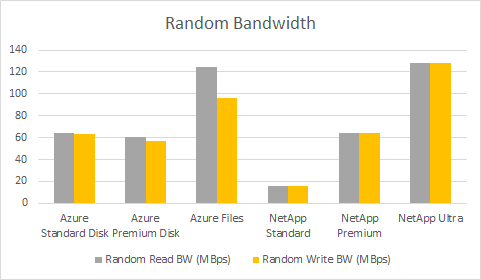

First let’s look at bandwidth for random and sequential workloads.

| Storage Type | Random Read Bandwidth (MBps) | Random Write Bandwidth (MBps) |
|---|---|---|
| Azure Standard Disk | 63.9 | 63.1 |
| Azure Premium Disk | 60.4 | 57.2 |
| Azure Files | 125 | 96.3 |
| NetApp Standard | 16.1 | 16 |
| NetApp Premium | 64.3 | 64.6 |
| NetApp Ultra | 128 | 128 |

There are a couple of surprising findings here. For starters, Azure Standard and Premium Disk are essentially the same for both read and write bandwidth. The second big surprise is that Azure Files comes close to the Ultra tier of NetApp Files on random reads (125 MBps vs 128 MBps), though it trails on random writes (96.3 MBps vs 128 MBps). If you've got a bandwidth-hungry application on AKS, Azure Files appears to be the way to go.
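The NetApp numbers land almost exactly on 16, 64, and 128. One plausible explanation, assuming the service-level limits Microsoft documents for Azure NetApp Files (16, 64, and 128 MiB/s per TiB of volume quota for Standard, Premium, and Ultra) and a 1 TiB quota on each test volume, is that every NetApp volume was simply running at its throughput cap. This sketch compares the measured random bandwidth against that assumed ceiling, treating the table's MBps figures as MiB/s.

```python
# Assumed Azure NetApp Files throughput limits, in MiB/s per TiB of volume quota.
MIBPS_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def throughput_cap_mibps(service_level: str, quota_tib: float) -> float:
    """Throughput ceiling of a volume: service-level rate times provisioned quota."""
    return MIBPS_PER_TIB[service_level] * quota_tib


# Random-bandwidth results from the table above: (read, write).
measured = {"Standard": (16.1, 16.0), "Premium": (64.3, 64.6), "Ultra": (128.0, 128.0)}

for level, (read, write) in measured.items():
    cap = throughput_cap_mibps(level, quota_tib=1)
    # A ratio near 1.0 suggests the volume is pinned at its cap.
    print(f"{level:<8} cap={cap:>4} read/cap={read / cap:.2f} write/cap={write / cap:.2f}")
```

If this model holds, a larger volume quota in the same pool would raise the NetApp numbers proportionally, which is worth keeping in mind before reading these results as fixed per-tier limits.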

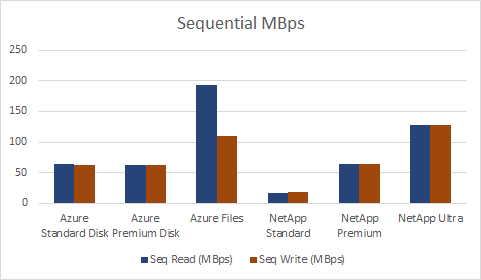

| Storage Type | Sequential Read Bandwidth (MBps) | Sequential Write Bandwidth (MBps) |
|---|---|---|
| Azure Standard Disk | 63.8 | 63.1 |
| Azure Premium Disk | 63.2 | 62.5 |
| Azure Files | 193 | 109 |
| NetApp Standard | 16.2 | 18.3 |
| NetApp Premium | 63.9 | 64.7 |
| NetApp Ultra | 128 | 128 |

Once again, Azure Standard and Premium Disk have roughly the same performance, and Azure Files outperforms both. The real kicker here is that Azure Files beats NetApp Files Ultra on sequential reads by a decent margin, 193 MBps vs 128 MBps, although Ultra still leads on sequential writes, 128 MBps vs 109 MBps. Whether your workload is random or sequential, Azure Files seems to be the way to go for bandwidth, particularly for reads.
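Picking the leader of each column by eye is easy to get wrong, so here is a tiny check over the sequential results. The dictionary and the `leader` helper are just for illustration; the numbers are copied from the table above.

```python
# Sequential bandwidth from the table above: (read MBps, write MBps).
sequential = {
    "Azure Standard Disk": (63.8, 63.1),
    "Azure Premium Disk": (63.2, 62.5),
    "Azure Files": (193, 109),
    "NetApp Standard": (16.2, 18.3),
    "NetApp Premium": (63.9, 64.7),
    "NetApp Ultra": (128, 128),
}


def leader(results: dict, column: int) -> str:
    """Storage type with the highest value in a column (0 = read, 1 = write)."""
    return max(results, key=lambda name: results[name][column])


read_winner = leader(sequential, 0)
write_winner = leader(sequential, 1)
read_ratio = sequential["Azure Files"][0] / sequential["NetApp Ultra"][0]
print(read_winner, write_winner, round(read_ratio, 2))
```

The same helper works on the random-bandwidth table, where NetApp Ultra edges out Azure Files in both columns.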

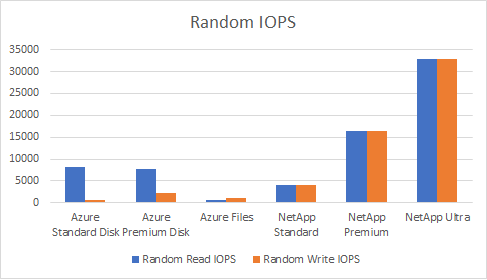

| Storage Type | Random Read IOPS | Random Write IOPS |
|---|---|---|
| Azure Standard Disk | 8159 | 555 |
| Azure Premium Disk | 7641 | 2306 |
| Azure Files | 735 | 1010 |
| NetApp Standard | 4094 | 4093 |
| NetApp Premium | 16400 | 16400 |
| NetApp Ultra | 32800 | 32900 |

While Azure Files may rule the roost for bandwidth, it doesn't hold a candle to the other storage types for IOPS: it posts the lowest read IOPS of the group, and only Azure Standard Disk trails it on writes. Azure Standard and Premium Disk have roughly the same read IOPS, but Premium pulls well ahead of Standard for writes, 2306 vs 555. The real standout in this case is NetApp Files. Standard (4094/4093), Premium (16400), and Ultra (32800/32900) are consistent across reads and writes. Standard delivers about half the read IOPS of Azure Disk but beats both disk tiers on writes, while the Premium and Ultra tiers dominate all other options. If you've got a workload that needs a ton of random IOPS, NetApp Files is the way to go.
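The suspiciously round NetApp IOPS figures are consistent with the same throughput cap. Assuming the dbench IOPS tests issue 4 KiB I/Os (an assumption about its block size) and the documented per-TiB limits of 16, 64, and 128 MiB/s apply to a 1 TiB volume, dividing each cap by the block size gives 4,096, 16,384, and 32,768 IOPS, within a fraction of a percent of the measured values.

```python
# Assumed Azure NetApp Files limits, MiB/s per TiB of volume quota.
MIBPS_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def iops_ceiling(service_level: str, quota_tib: int = 1, block_kib: int = 4) -> int:
    """Max IOPS if throughput is the only limit: KiB/s divided by KiB per I/O."""
    kib_per_second = MIBPS_PER_TIB[service_level] * quota_tib * 1024
    return kib_per_second // block_kib


# Random read IOPS from the table above.
measured_read_iops = {"Standard": 4094, "Premium": 16400, "Ultra": 32800}
for level, observed in measured_read_iops.items():
    print(f"{level:<8} ceiling={iops_ceiling(level):>6} observed={observed:>6}")
```

Because the limit is expressed in bandwidth, smaller I/Os would yield more IOPS and larger I/Os fewer, so these figures are specific to the block size dbench used.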

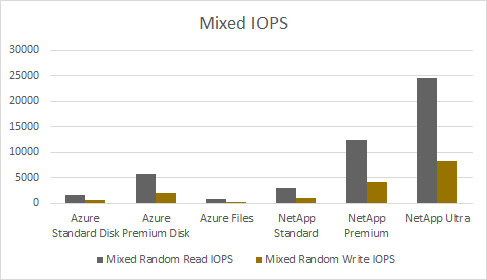

| Storage Type | Mixed Read IOPS | Mixed Write IOPS |
|---|---|---|
| Azure Standard Disk | 1670 | 558 |
| Azure Premium Disk | 5723 | 1915 |
| Azure Files | 770 | 257 |
| NetApp Standard | 3072 | 1018 |
| NetApp Premium | 12300 | 4100 |
| NetApp Ultra | 24600 | 8186 |

The picture for mixed IOPS is roughly the same, although in this case Azure Premium Disk outperformed Standard Disk by a factor of more than 3x. Azure Files was the worst, with a meager 257 IOPS for mixed writes. The NetApp tiers once again shone, although this time the write IOPS were about a third of the read IOPS. Still, if you need IOPS, NetApp Files is the way to go.
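The one-third ratio is what a 75/25 read/write mix looks like when the total is capped. Assuming the mixed test directs 75% of operations to reads and assuming 4 KiB IOPS ceilings of 4,096, 16,384, and 32,768 for 1 TiB Standard, Premium, and Ultra volumes, splitting each ceiling 75/25 predicts 3,072/1,024, 12,288/4,096, and 24,576/8,192, close to the NetApp rows in the table.

```python
def mixed_split(total_iops: float, rwmixread: int = 75) -> tuple:
    """Split an IOPS budget into (reads, writes) for a given read percentage."""
    reads = total_iops * rwmixread / 100
    return reads, total_iops - reads


# Assumed 4 KiB IOPS ceilings for 1 TiB volumes (16, 64, 128 MiB/s divided by 4 KiB).
ceilings = {"Standard": 4096, "Premium": 16384, "Ultra": 32768}
for level, total in ceilings.items():
    reads, writes = mixed_split(total)
    print(f"{level:<8} reads~{reads:.0f} writes~{writes:.0f}")
```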

You might be curious what the difference is between the random and mixed IOPS tests. Looking at the docker-entrypoint.sh file in the dbench repo, the Random IOPS test is made up of two separate runs, one for reads and one for writes. The Mixed IOPS test is a single run that mixes read and write operations. The relevant FIO setting is readwrite, which is set to randread, randwrite, and randrw respectively. The randrw setting used by the Mixed IOPS test accepts additional options, rwmixread and rwmixwrite, that configure the mix of operations. The dbench setup uses a value of 75 for rwmixread, which means that 75% of the operations will be reads. You can check out the official FIO docs to learn more.

In a nutshell, the Random IOPS tests run reads and writes separately, so each result covers only read or only write operations. The Mixed IOPS test runs 75% reads and 25% writes in the same run, giving you a nice blend.
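The three random runs differ mainly in the readwrite mode, plus rwmixread for the mixed run. The sketch below builds fio command lines along those lines. It is not dbench's actual entrypoint: the test names, the 4k block size, the iodepth of 64, and the libaio engine are assumptions. When only rwmixread is given, fio treats the remainder (here 25%) as writes.

```python
import shlex


def fio_args(test: str, mountpoint: str = "/data", size: str = "1G",
             direct: str = "1", rwmixread: int = 75) -> list:
    """Build an fio command line for one of the three random IOPS tests (illustrative)."""
    modes = {"read_iops": "randread", "write_iops": "randwrite", "mixed_iops": "randrw"}
    args = [
        "fio",
        f"--name={test}",
        f"--filename={mountpoint}/fiotest",
        "--ioengine=libaio",   # assumption
        f"--direct={direct}",
        "--bs=4k",             # assumption
        "--iodepth=64",        # assumption
        f"--size={size}",
        f"--readwrite={modes[test]}",
    ]
    if modes[test] == "randrw":
        # Only meaningful for mixed workloads: percentage of operations that are reads.
        args.append(f"--rwmixread={rwmixread}")
    return args


for test in ("read_iops", "write_iops", "mixed_iops"):
    print(shlex.join(fio_args(test)))
```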

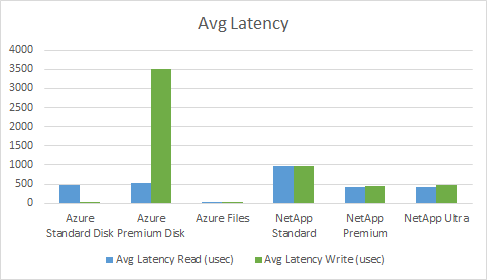

| Storage Type | Avg Read Latency (usec) | Avg Write Latency (usec) |
|---|---|---|
| Azure Standard Disk | 489 | 7.3 |
| Azure Premium Disk | 518.9 | 3511.7 |
| Azure Files | 22.5 | 5.1 |
| NetApp Standard | 973.4 | 973.5 |
| NetApp Premium | 416 | 463.4 |
| NetApp Ultra | 413.1 | 465 |

Unlike the other metrics, where higher is better, latency is generally considered a bad thing, so lower is better. And you can't get much lower than the latency on Azure Files! The measurements here are in microseconds, abbreviated as usec. The latency for Azure Files is so low as to be suspicious, as if the data were being cached locally rather than actually reaching Azure Files at all. The other big standout is that the average write latency for Azure Premium Disk is far higher than for any other storage type. There's definitely something weird going on here, and it bears further investigation, possibly in a separate blog post.

NetApp Files is roughly what you might expect: higher latency than Azure Disk at the Standard level, and lower read latency than either disk tier at the Premium and Ultra levels. Based on the graph, I would recommend Azure Files for latency, but take that with a grain of salt. There's something that doesn't quite sit right with these numbers, and I need to find out what is really going on.
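One quick sanity check on the latency numbers is Little's Law: the number of I/Os in flight equals IOPS times latency, so a test at a fixed queue depth implies IOPS = depth / latency. Assuming the dbench latency tests run at a queue depth of 4 (an assumption about its settings), NetApp Standard's 973.4 usec implies roughly 4,100 IOPS, in line with its random IOPS result. Azure Files' 22.5 usec implies vastly more IOPS than it delivered in the IOPS tests, even at a depth of 1, which fits the suspicion that those reads never left the node.

```python
QUEUE_DEPTH = 4  # assumption: iodepth used by the dbench latency tests


def implied_iops(avg_latency_usec: float, queue_depth: int = QUEUE_DEPTH) -> float:
    """Little's Law: in-flight I/Os = IOPS * latency, so IOPS = depth / latency."""
    return queue_depth / (avg_latency_usec / 1_000_000)


# (average read latency in usec, random read IOPS measured earlier in this post)
checks = {
    "Azure Files read": (22.5, 735),
    "NetApp Standard read": (973.4, 4094),
}
for name, (latency_usec, measured_iops) in checks.items():
    for depth in (1, QUEUE_DEPTH):
        print(f"{name}: depth {depth} implies ~{implied_iops(latency_usec, depth):,.0f} IOPS "
              f"vs {measured_iops:,} measured")
```

A mismatch this large does not say where the caching happens, only that the latency figure and the IOPS figure cannot both describe round trips to the same backend.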

The point of this exercise was to compare different storage options for AKS, and the results were somewhat surprising. If pure bandwidth is what your application craves, then Azure Files is probably the way to go. On the other hand, if pushing IOPS is your goal, NetApp's Premium and Ultra tiers have you well covered. In the world of latency, Azure Files appears to be the champion, but again I caution you to try it out for yourself. Another key finding is that there is not a ton of difference between Azure Standard and Premium Disk. Premium delivered better write IOPS and mixed-workload IOPS, but other than that it doesn't make sense to pay extra for Premium storage.
